Ecommerce websites typically distribute product listings across multiple pages to optimise load speeds and user experience. However, the choice of pagination method can significantly affect the website’s organic visibility. This guide aims to cover best practices for pagination based on experimentation to effectively boost your organic web traffic.

When it comes to dividing products across multiple pages to enhance the user experience, ecommerce websites commonly use the following types of pagination:

- Pagination: Moving between pages by clicking on page numbers (e.g., 1, 2, 3 >>). This is the preferred method, used by 54% of the UK’s top fashion retailers.

- Lazy Load (“Load more”): User scrolls to the end and clicks a button to load additional results.

- Infinite scroll: Results automatically load as users scroll down, creating an endless scrolling experience.

- All in one/no pagination: All results are displayed on a single page without pagination.

- Combo: Multiple pagination types used across the website, for testing or accessibility purposes such as pagination for non-JavaScript crawlers and infinite scroll for JavaScript users, or different sections using different pagination methods.

To ensure search engines can crawl and index all products, search engine friendly pagination is vital. It involves making the website’s pagination crawlable and indexable, regardless of the pagination method of choice. This becomes particularly important when only a fraction of the products are displayed on the initial category page.

A brief history of Google’s Guidelines on Pagination

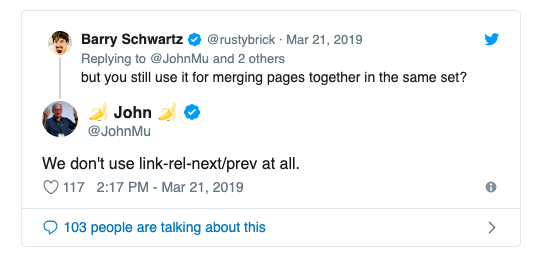

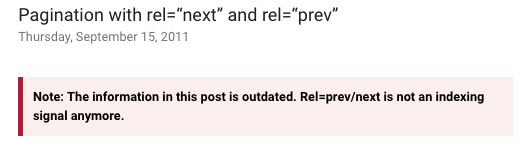

Since the official announcement of the rel=next/previous deprecation on March 21, 2019 (despite Google reportedly not having used it for a while before that), SEOs were left waiting for a best practice guide on search engine-friendly pagination from Google itself.

With no official Google pagination best practices published until September 2022, and given how heavily eCommerce sites rely on pagination, many of these sites had no choice between 2020 and 2022 but to decide for themselves how to treat that aspect of their site.

Fast forward to 2024: despite these best practices being published, pagination continues to be one of the SEO industry’s most debated and confusing topics, to the extent that I still see many retailers who have blocked the indexing of their pagination altogether.

Throughout the course of this article, my goals are to:

- Provide clear and simple pagination best practices – mostly for ecommerce, but many of the tips here apply to all industries looking to maximise the number of listings they have on their site without cannibalisation or inflating their crawl budget

- Demonstrate the importance and impact of implementing search engine friendly pagination principles on an eCommerce site’s organic visibility through a case study.

- Link back to my report on the state of search engine friendly pagination practices in eCommerce from the top 150 fashion retailers in the UK, illustrating the size of the opportunity for small and large brands alike to get ahead in the game – the dos & don’ts.

- Share changes in search engine friendly pagination practices among retailers during Covid.

- Provide up-to-date tips and resources on how to make your pagination indexable without index bloat concerns

- Lastly, show that brands don’t need to (and shouldn’t) wait for a complete guide from Google before acting on the indexability of the products that sit exclusively within their pagination – everything brands need for pagination has already been published by Google, as well as other trusted sources.

TL;DR Pagination Best Practices

If you want this article in presentation format, here’s my BrightonSEO 2020 Presentation – Thank U (Rel) Next – State of Retail Pagination

Whilst we did (finally) get the Google pagination best practices in 2022, many of them echo the best practices I derived from research and observation three years prior. Those are outlined in this presentation and were reviewed by Googler John Mueller before my BrightonSEO talk in 2020.

To make your pagination search engine friendly, here’s the quick edition of these best practices:

- Your paginated URLs must be unique: Whether via parameters or permanent URLs e.g. /category-page?page=2 or /category-page/page2

- Your paginated URLs must be crawlable: Avoid using fragment identifiers (#) to generate the pagination, as these prevent Google from crawling and indexing the pages. This is best achieved by generating and linking the pagination via clean hrefs from the website structure.

- Your paginated URLs must be indexable: Don’t block them via robots.txt or meta robots. Your paginated pages should be index, follow, have a canonical to self, be linked from the website and not be disallowed in your robots.txt file, just as you would do for any other page whose content you want properly indexed.

- Think JS turned off – it’s 2024, but search engines still struggle with JavaScript or, best case, take longer to crawl, render and index it, which is very challenging for ecommerce sites with ever-changing product catalogues and stock. Make it easy on them, and make sure you have a non-JS version of your pagination. For infinite scroll/load more websites this can be a page with all products on one page; you can serve traditional pagination with href links when JS is turned off; or you can render your JS on the server side rather than the client side.

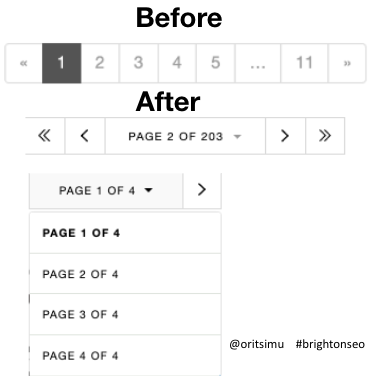

- De-optimize your paginated pages to decrease the chances of these showing up in search – make sure that the 1st page of the series (i.e. the category page) is the most optimised for SEO by adding unique page content, internal links & meta tags. If you de-optimize the rest of the pages in the series (by removing those links/content/meta tags) Google will get a clear message of what to rank.

- Take control of your crawl budget – making your paginated URLs crawlable, indexable and unique doesn’t mean that every other filter on your site (e.g. faceted navigation) should be. Save search engines’ time by blocking non-pagination filtering parameters in robots.txt. If you do want your pages to appear in search for colour or size, create collection pages (e.g. red dresses) that get internally linked from the main category (e.g. dresses). The chances of a deep filter with product type and colour (and even deeper if size, style, fabric etc. is added) ranking, let alone outranking your biggest competitors, are very small. Prioritise the creation of these collections by sales, internal site searches, revenue and, of course, search volume, search trends and seasonality.

- Crawl your website after every change, fix and repeat – the only way to find out if too much or too little is getting crawled by Google is to crawl the website after every single change to make sure that the crawling restrictions you’ve put in place are delivering the expected results. I recommend finding the enterprise crawler that works for you (for this research and work I’ve used Lumar, previously DeepCrawl), but choose one that is both powerful and easy to understand, because otherwise there’s no point in the tool at all. If no enterprise crawler is available, have trusty old Screaming Frog handy.

- Search friendly pagination UI adjustments – get your product and developer teams on board – build your case thoroughly and make sure you speak their language (low effort, high impact). Whilst some product/tech people might say these cannot be achieved without compromising on UX – they can and have. It’s all about working together to find a middle ground that works for everyone, as you will see in the case study below. There’s no point in pretty graphics when search engines struggle to show your products in search. I’ll go further than that and say that crawlability and indexability are a ranking factor, as the results of the case study below will show, helping a small-medium business rank very high for their biggest head terms with little to no links or digital PR efforts. For ecommerce websites – the easier you make it for Google to rank, the higher you get, which is a great way to get ahead of big competitors in your space.

- Big brands are not a synonym for best practices – you might look at this work and say “but X is a huge brand and they don’t do it”, or “X do the opposite”. Well, having worked on big brands over the years, I can tell you (if you didn’t know this already) that getting tech resources prioritised in corporate environments is incredibly difficult. You can be the most eloquent, build your case study and your priority matrix, calculate the revenue and rankings increase, and show the impact on competitors, but none of that will work if the business has something even more important it needs the resource for. So the fact that you see (wrong/broken) things implemented on bigger competitors doesn’t mean you should copy them blindly. Every site has its own set of circumstances and requires a bespoke solution, so test, crawl and experiment to see how things work for your brand.

- Opt for gradual rollout – if A/B testing via an SEO testing platform is not available, try to limit the change to certain sections of your site first, so that fixes and tweaks are in place before you roll it out to the rest of the site.
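The crawl budget point above (keep pagination open, keep other filters closed) can be sketched as a simple URL classifier to run over a crawl export. This is a minimal sketch, not a definitive rule set: the parameter name `page` and the `example.com` URLs are assumptions, so swap in whatever your platform actually emits.

```python
from urllib.parse import urlsplit, parse_qsl

# Assumed pagination parameter name; adjust to your own URL scheme.
PAGINATION_PARAMS = {"page"}


def should_be_crawlable(url: str) -> bool:
    """Return True when a URL has no query parameters other than pagination.

    URLs carrying any other parameter (colour, size, sort...) are treated
    as faceted navigation, i.e. candidates for blocking in robots.txt.
    """
    query = urlsplit(url).query
    params = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
    return params <= PAGINATION_PARAMS


if __name__ == "__main__":
    for url in (
        "https://example.com/dresses",
        "https://example.com/dresses?page=2",
        "https://example.com/dresses?colour=red&page=2",
    ):
        print(url, should_be_crawlable(url))
```

Running this against the list of URLs your crawler discovered gives a quick view of which URLs you intend search engines to reach and which ones your robots.txt rules should be catching.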

Deep-dive and case study: What is Search Engine Friendly Pagination and Why Does it Matter?

The vast majority of sites opt not to showcase 100% of their offering on a single page due to the impact on page load times (unless optimized to perfection) and UX related factors.

This is particularly true for eCommerce sites with hundreds and sometimes thousands of products that retailers opt to spread across several pages to allow a better experience for the user.

Pagination by Type

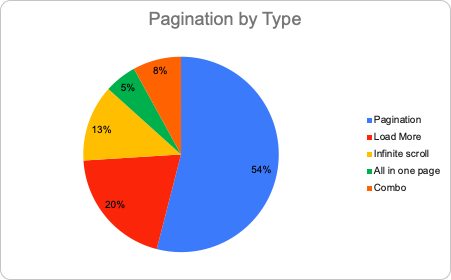

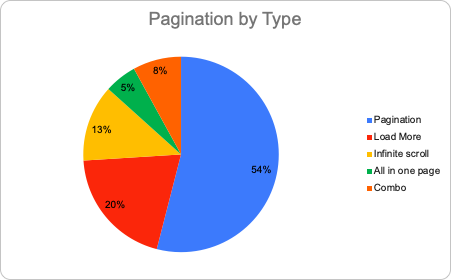

According to my research into the top 150 UK fashion retailers pagination practices, these are the most common pagination types retailers opt for:

- Pagination – moving from one page of results/products to the next via clicking on page numbers e.g 1 2 3 >>

- Load more – when the user scrolls down until the end of the list where there is a “load more” button which will add on to the page the next set of results (also called “lazy load”)

- Infinite scroll – when no action from the user is required to load the next set of results. As soon as they scroll down to the end of the results, the next set appears, mimicking an endless scroll experience

- All in one/no pagination – when all the results are loaded on the same page and pagination is not effectively used

- Combo – when more than one pagination type is used. E.g. Pagination when javascript is turned off (in the case of search engine crawlers which do not know how to process JS like Baidu), and infinite scroll when JS is activated. Or simply two different sections of the website use two different pagination types.

When only a fraction of the products are shown on the first page of a category, it becomes increasingly crucial to create a clear pathway for search engines to crawl and index 100% of the products, and the best way to achieve this is via search engine friendly pagination. That is, making sure that the pagination of the website is both crawlable and indexable.

Rel=Next/Prev: The Outdated Implementation

If a brand was worried about the paginated pages themselves getting ranked, they could use the rel=next/prev tag, hinting to Google on what the next page is without necessarily having to index the paginated page itself. But those days were officially over on March 21, 2019 (and probably sometime before that) when Google made their official announcement on the deprecation of rel=next previous in response to questions from the SEO community.

The New Google-Recommended Solution: Search Engine Friendly Pagination

On March 22, 2019, John Mueller made the first recommendation on pagination after the rel=next/prev deprecation, in a Google Webmaster Hangout:

I’d also recommend making sure the pagination pages can kind of stand on their own

I.e. just like any other page on your site that stands on its own (with its own URL, not blocked from search engines and with a canonical to self), this is how you should think of your pagination. The full best practices reinforcing this were published in September 2022.

New Pagination, New Concern: How to Avoid The Paginated Pages from Indexing?

From talks with different SEOs, the immediate concern seems to be that if paginated pages become indexable, they might start cannibalizing the site’s search results.

In fact, the effort to make any changes in pagination didn’t even seem worth it to many.

Hello yet again fellow organic search aficionados (I try to mix it up) kindly help me yet again to fill those #brightonseo slides (Almost) a year has passed since the announcement of rel=next/prev deprecation by Google. Have you changed your approach to pagination for #seo?

— Orit Mutznik (@OritSiMu) January 22, 2020

When it comes to pagination, it’s important to weigh in risk vs reward. If you block your pagination from being crawled and indexed by search engines, yes, you won’t see “page 1” in the search results. But on the other hand, you potentially block from crawling and indexing thousands of pages which could drive a significant revenue stream for your site from search.

Tip: You can get the best of both worlds: the products that exist only on your paginated pages will be indexed, and Google will only rank the first page of the paginated series (i.e. the category page), if you make your pagination indexable and make sure that the first page of the series is the most optimized for SEO by adding unique page content & meta tags. If you de-optimize the rest of the pages in the series (by removing that content and those meta tags), Google will get a clear message of what to rank.

The Impact of Non-Search Engine Friendly Pagination

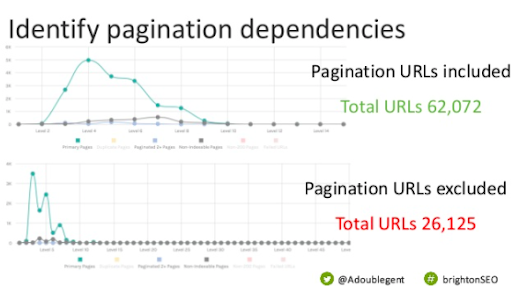

According to Adam Gent’s research The State of the Web: Search Friendly Pagination and Infinite Scroll published in April 2019, shortly after the rel=next/prev deprecation announcement, “Some sites lost 30% – 50% of their pages when excluding paginated pages from being crawled”.

The State of Pagination & Infinite Scroll – BrightonSEO April 2019 – Adam Gent from DeepCrawl (Since rebranded to Lumar)

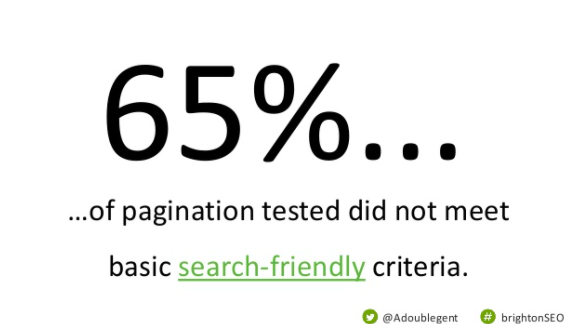

This research was conducted on the top 150 Alexa sites (RIP) and showed that 65% of the analyzed sites opt for non-search engine friendly pagination practices.

These results also resonate with eCommerce sites, as the following case study and research will show.

Tip: If you flip the coin and spot the opportunity, that is your chance to potentially grow your organic visibility by 30%-50%, as you’ll be increasing the arsenal of pages that are available for Google to rank your brand on a variety of search queries.

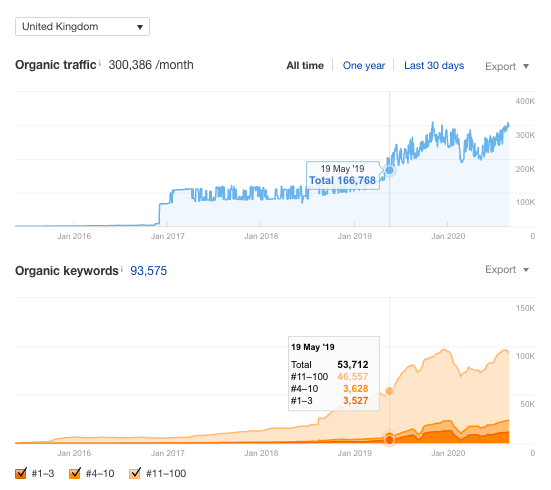

The Impact of Moving to Search Engine Friendly Pagination in eCommerce: A Case Study

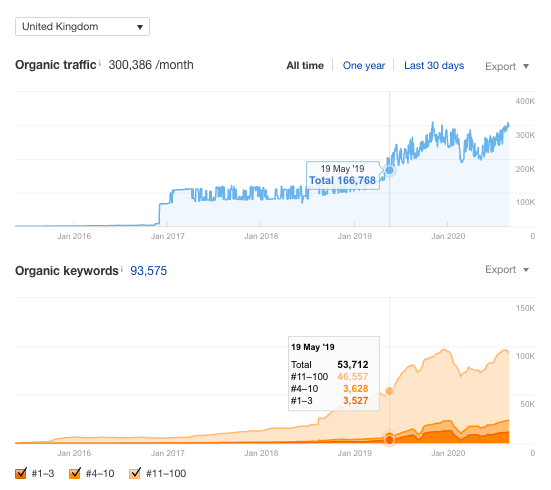

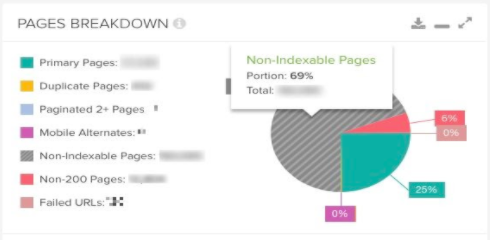

Whereas the average of content blocked by non-search engine friendly pagination on Alexa’s top 150 sites is between 30%-50%, in the case of women’s fashion brand SilkFred this number reached 69% at the beginning of 2019, circa the rel=next/prev deprecation.

This effectively means that most products on the site existed in the paginated pages only, and there was no way for Google to crawl and index them while the paginated pages were not crawlable and indexable.

This also means that only 31% of the website was being properly served to search engines.

Following Adam Gent’s State of Pagination BrightonSEO 2019 talk, which was followed by the publication of DeepCrawl’s Search Engine Friendly Pagination Best Practices, this ambitious brand saw the organic growth opportunity and the wheels were set in motion.

The Case Study: Meeting the Search Engine Friendly Criteria For Pagination

These are essentially the things a URL needs in order to “stand on its own” and become indexable.

- URL Uniqueness: Whether via parameters or permanent URLs, the paginated page URL has to be unique and change as you go through the pages. e.g. /category-page?page=2 or /category-page/page2 or All in One (no pagination)

- Crawlability: Avoid using fragment identifiers (#) to generate the pagination in order to enable Google to crawl and index the pages. This is best achieved via generating and linking the pagination via clean hrefs from the website structure.

- Indexability: Not blocked by robots.txt or meta robots, canonical to self + link from website – as you would do for any other page you wish to properly index its content.
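The three criteria above can be expressed as a small check to run over crawl data. The following is a rough sketch under stated assumptions rather than a definitive audit: the function name, its arguments and the `example.com` URLs are all hypothetical, the inputs are plain strings you would already have collected from a crawl, and nothing is fetched over the network.

```python
from urllib.parse import urldefrag
from urllib.robotparser import RobotFileParser


def audit_paginated_page(url, first_page_url, canonical, meta_robots, robots_txt):
    """Check one paginated URL against the three criteria above."""
    base, fragment = urldefrag(url)

    # URL uniqueness: with the fragment dropped, the URL must still differ
    # from the first page of the series.
    unique = base != urldefrag(first_page_url).url

    # Crawlability: the pagination must not rely on a fragment identifier.
    crawlable = fragment == ""

    # Indexability: allowed by robots.txt, no noindex, canonical to self.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch("Googlebot", url)
    directives = {d.strip().lower() for d in meta_robots.split(",")}
    indexable = allowed and "noindex" not in directives and canonical == url

    return {"unique": unique, "crawlable": crawlable, "indexable": indexable}


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /search\n"
    print(audit_paginated_page(
        "https://example.com/dresses/page/2",
        "https://example.com/dresses",
        "https://example.com/dresses/page/2",
        "index, follow",
        robots,
    ))
```

Note that Python’s built-in robots.txt parser does not understand wildcard patterns, so treat the robots.txt part of this check as an approximation and confirm with your crawler of choice.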

SilkFred Pagination Setup Benchmark, May 2019:

Surprisingly, this setup is really common among retailers who do not see the connection between pagination and search engine visibility. And this is where you, as the SEO, come in.

| Criterion | Setup |
| --- | --- |
| Canonicalisation | Main |
| URL structure | #? (Client Side Rendered JS) |
| Crawlability | No |
| Indexability | No |
| Crawl depth | 50+ Pages |
| Indexable percentage of site | 31% |

3 Reasons Why This Is Bad for SEO

- Canonical to main:

“Specifying a rel=canonical from page 2 (or any) to page 1 is not the correct use of rel=canonical, as these are not duplicate pages. Using rel=canonical … would result in the content on pages 2 and beyond not being indexed at all.” 5 Common Mistakes With rel=canonical, Google Webmaster Central

- URL Structure using fragments:

“‘Fragments aren’t meant to point to different content, crawlers ignore them; they just pretend that the fragments don’t exist,’ Splitt said. This means that if you build a single-page application with fragment identifiers, crawlers will not follow those links.” Martin Splitt, Google Developer Advocate

- Relying on Client Side JavaScript to render pagination:

Not all search engines can render JS, and despite Google’s increased client-side JavaScript rendering capabilities, the official Google recommendation for rapidly changing sites is to render content on the server side or provide a static HTML alternative. This also gives your brand a chance to rank in alternative search engines. In addition, consider that in the time it takes a product page to be fully rendered and indexed, that product could go out of stock or be removed from the shop. According to research conducted by Onely, 40% of JS content is still not indexed after 14 days, making this a real possibility.

With this setup in place causing a depth of over 50 layers and only 31% of the website being indexed, this served as a strong case to make the necessary changes to impact this online fashion retailer’s organic visibility significantly through the use of search engine friendly pagination.

Implementing Search Engine Friendly Pagination: The Results – 1Y Later

The following changes have been made to the SilkFred new pagination.

| Canonicalisation | Self |

| URL structure | /page/2 (SSR JS) |

| Crawlability | Yes |

| Indexability | Yes |

| Crawl depth | 7 pages (two extremes*) |

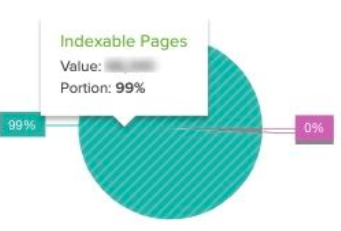

| Indexable Percentage of site: | 99% |

Tip: Accompany your pagination changes (in the backend) with UX changes (in the frontend). Involving UX designers and considerations will make the new pagination as accessible to users as it is to search engines, making it much easier to use.

3 Reasons Why This is Good for SEO

1. Canonical to self:

Allowing the content of the paginated page to get crawled and indexed does not mean that it will be ranked. If you strengthen the signals of your category page, you can rest assured that your paginated pages will not rank and you will benefit from so many new products that were previously undiscovered to form part of your new and improved organic visibility.

According to this Twitter poll, the SEO community concurs:

Ahead of my upcoming #brightonseo talk about the state of search engine friendly pagination

Survey question:

If you worked on #seo friendly pagination this year did you canonicalize the paginated pages to main or to self? if so why? Happy to credit best answers on my slides

– Orit Mutznik (@OritSiMu) December 3, 2019

2. URL Structure using permanent URLs:

Though you can also use parameters to create unique URLs, the permanent URL solution means you don’t fall under any parameter rules set up in Google Search Console or under any exclusions you might have in robots.txt. However, if you make sure that the pagination parameters are unblocked and not treated like the rest of the parameters in Google Search Console, parameters can also be used for this purpose. A unique, self-canonical URL that’s linked via a clean href from the website is a URL whose content Google can crawl and index.

3. Serving the content on the server side:

The use of JS is only increasing and it’s hard to find a brand that can avoid this for the sake of SEO, but brands don’t have to avoid it. Providing a static HTML alternative and serving the content on the server side makes it fast and easy for Google to render and index and provides alternative search engines with a way to understand your website.

With the combination of these 3 main changes, the percentage of indexable pages grew from 31% to 99%.

Post Release Woes

1. A Word On Site Depth And Website Architecture: Too Deep or Too Flat?

Pagination is one of the main reasons why websites can reach great depth, meaning that many products can only be found after users and search engines alike go through great depth in order to find them.

In his research How Site Pagination and Click Depth Affect SEO – An Experiment, Matthew Henry from Portent has looked at how “small changes to your pagination can have a surprisingly large impact on how a crawler sees your site and all that wonderful content” and this is exactly what was done here.
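To illustrate how the links inside a pagination block drive depth, here is a small sketch that measures click depth with a breadth-first search from the category page (page 1). The two linking schemes are hypothetical examples chosen for illustration; they are not the schemes from the Portent experiment or from SilkFred.

```python
from collections import deque


def max_click_depth(total_pages, links_from):
    """Breadth-first search from page 1; return the depth of the deepest page."""
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        for target in links_from(page, total_pages):
            if 1 <= target <= total_pages and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return max(depth.values())


def next_only(page, total_pages):
    # Assumed scheme A: each page links only to the following page.
    return [page + 1]


def neighbours_and_ends(page, total_pages):
    # Assumed scheme B: two pages either side, plus the first and last page.
    return [1, total_pages, page - 2, page - 1, page + 1, page + 2]


if __name__ == "__main__":
    print(max_click_depth(50, next_only))
    print(max_click_depth(50, neighbours_and_ends))
```

For a 50-page series, the next-only chain leaves the last page 49 clicks from the category page, while the second scheme brings the deepest page down to 13 clicks, without changing the number of pages at all.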

With the implementation of these changes, the site depth changed dramatically from 50+ to 7, which, after a chat with John Mueller on the subject, looks like going from one extreme to the other.

Maybe you went from too deep to too flat? If everything is a few clicks away, then how would Google know which pages are more important?

John Mueller

This is a very important point to consider when making such a change and the reason why though these changes had a very positive impact on SilkFred our work here is not over, and optimizations to the new pagination will continue.

On a positive note, this “flat” website architecture allows newly added products to get indexed within seconds, so it’s not all bad.

2. 1st iteration of new pagination was via client side JS

Though I was unsure at that point how quickly Google would index the new pagination while it was rendered on the client side, I was surprised to discover that approximately 70% of the new pagination was properly indexed within a few days. The full transition to server side JS was complete within a week or two, pushing organic visibility even further.

3. Main page content duplicated across paginated pages

As SEOs, one of the things we simply cannot tolerate is duplicate content, and discovering that the main page content is duplicated across the entire series of paginated pages was a big red alert.

However, this is not as bad as you may think: new pages that duplicate the content of a well-established page won’t rank, and in this case we don’t want the paginated series to rank anyway. Even so, this has been fixed, and the duplicate content on the paginated pages has been removed. None of the paginated pages rank or outrank a category page.

4. Empty paginated pages

An important consideration to keep in mind, especially for a dynamic eCommerce site with products getting added and removed constantly – when you index your paginated series, provide a dynamic solution for the last page in the series, which is something that I admittedly did not consider.

Considering both user and search engine aspect, the chosen solution was to 404 the last page of the series if it becomes empty. It’s not a 410 because that page might come back again, and not a 301/302/307 because users won’t understand why they have suddenly been redirected. But surely more solutions can be implemented to solve this problem.
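The rule described above (answer with a 404 once a page in the series has no products left) can be sketched as follows. This is an illustrative sketch only: the function name and arguments are hypothetical, and keeping page 1 alive even for an empty category is my assumption here, not something the case study specifies.

```python
import math


def status_for_page(page, total_products, per_page):
    """Return the HTTP status a paginated URL should answer with."""
    if page < 1:
        return 404
    # Assumption: the first page always exists, even for an empty category.
    last_page = max(1, math.ceil(total_products / per_page))
    return 200 if page <= last_page else 404


if __name__ == "__main__":
    # 120 products at 48 per page fill three pages...
    print(status_for_page(3, 120, 48))
    # ...but once stock drops to 96 products, page 3 becomes empty.
    print(status_for_page(3, 96, 48))
```

Because the status is computed from the live product count, the page returns to a 200 on its own as soon as enough products are added back, which is the reason a 404 was preferred over a 410.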

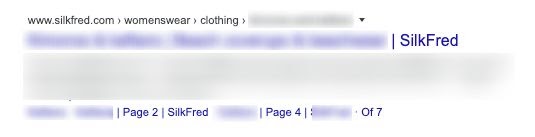

5. Paginated pages showing as sitelinks

A side effect of creating a cluster of strongly interconnected paginated pages, all on an equal level.

How to overcome this problem?

- Implement navigation schema on the “real” inner links of the page

- Implement the relatively new data-nosnippet attribute to block the pagination section from appearing in the SERP snippet

I can confirm that this combination works, and the paginated pages no longer appear in the snippet.

Despite these post release woes, the results speak for themselves, with the date on the graph being the release date for the new pagination project:

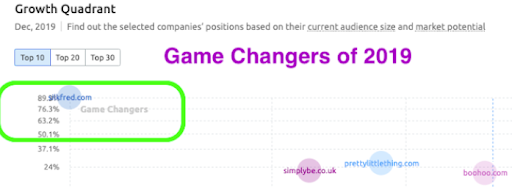

eCommerce Pagination Research 2020: How do UK Fashion Retailers Measure Up 1 Year Post-Rel=Next/Prev Deprecation?

After working on the search engine friendly pagination project and seeing the impact it had on SilkFred, I was curious to see how, or if, my competitors in the women’s fashion industry had reacted to the rel=next/prev deprecation. Did they see the opportunity the way I saw it? The short answer is: most didn’t, no.

eCommerce Pagination Research Methodology & Findings

- Crawled & reviewed the top 150 Women’s Fashion Retailers in the UK: JS Enabled vs Disabled (SEMRush, Screaming Frog, DeepCrawl)

- Categorized each site based on the type of pagination they are using (Pagination, Load More, IS, etc)

- Used DeepCrawl’s Search Friendly Pagination Benchmark: URL uniqueness, crawlability & indexability

Goal: To determine who also saw this as an opportunity to improve product accessibility, indexability, and website architecture.

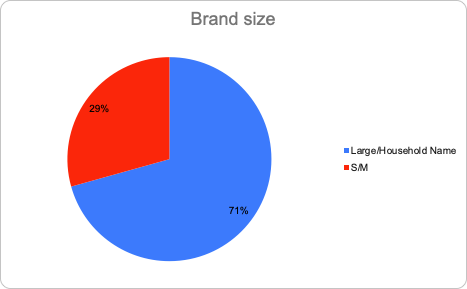

Researched Brands by Size

From a search visibility point of view, it’s no surprise that the most visible brands are also the biggest, according to SEMRush*

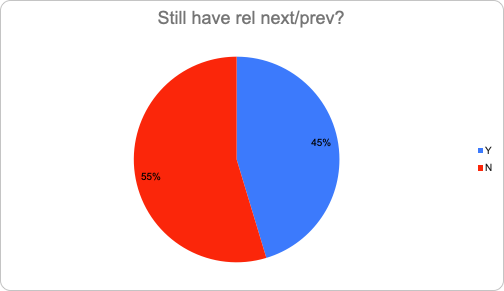

How many still have rel=next/prev in their code?

Though most have removed it, it’s evident that the SEO community is divided about this:

Why would they remove it?

- No longer required by Google

Why would they keep it?

- Other SEs+W3C need it*

- No resources

- They don’t care

*Lily Ray, Path Interactive – How to Correctly Implement Pagination for SEO & User Experience

The Most Popular Types of Pagination Used by UK’s Top Women’s Fashion Retailers

Pagination is the most common option retailers opt for, followed by “load more” (lazy load), infinite scroll, no pagination (all in one), and a combination of more than one form of pagination.

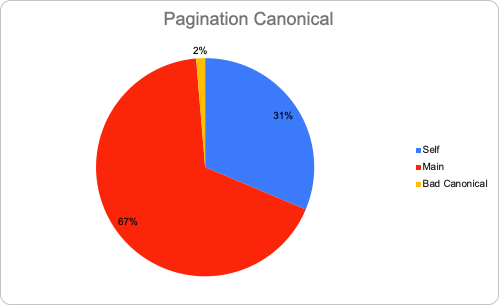

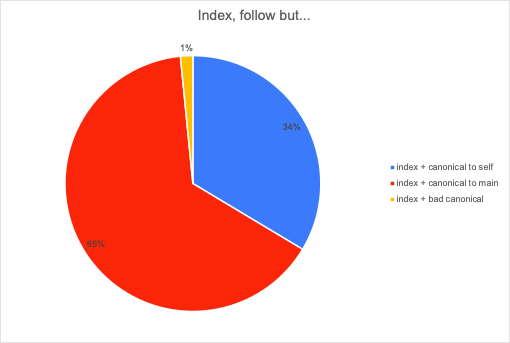

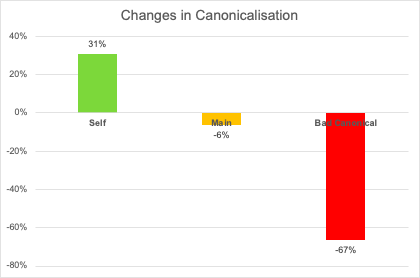

Canonicalization

Despite the fact that Google clearly marks canonicalization to main as one of its 5 common mistakes with rel=canonical and overall agreement among the SEO community on the right approach being a self-canonical for each page in the paginated series, most UK women’s fashion retailers canonicalize their paginated series to the first page. This means that they actively prevent products that exist only in one of the pages of that series from being indexed.

Anything that is only contained on the non-canonical versions will not be used for indexing purposes.

John Mueller, Google Webmaster Office-hours Hangout Notes 7 Feb 2020 also on YouTube.

If pagination is deindexed: Are products in sitemap enough?

Using a sitemap doesn’t guarantee that all the items in your sitemap will be crawled and indexed, as Google processes rely on complex algorithms to schedule crawling.

In conclusion: Do not rely on your sitemap for indexing products that only exist in non-indexable pagination.

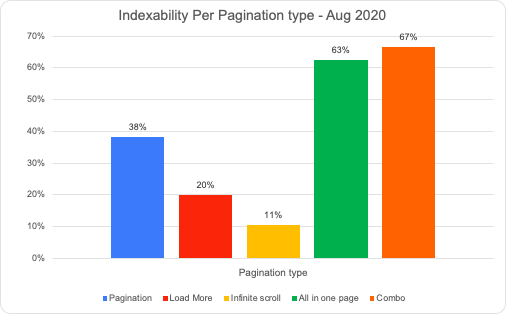

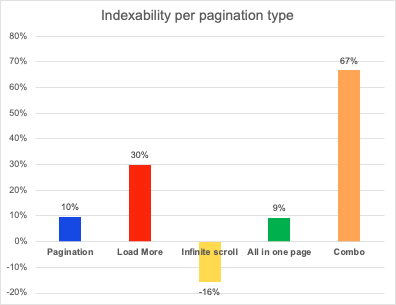

Indexability Per Pagination Type

Despite the existence of Google guidelines for SEO-friendly Infinite Scroll Sites from 2014, infinite scroll is the biggest loser when it comes to indexability and SEO friendliness.

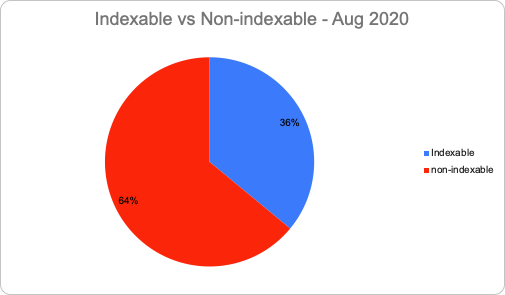

Total Indexable vs Non-indexable

64% of the UK’s leading women’s fashion brands opt for their pagination not to be discovered by Google, despite the number of products that may exist only there.

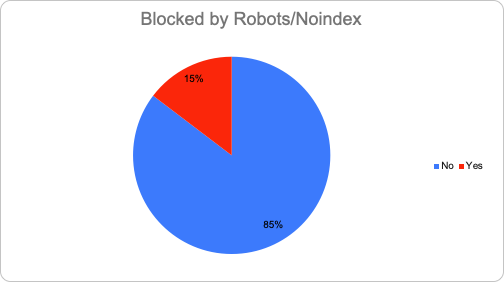

Blocking Pagination From Search Altogether

15% of brands block their paginated series altogether via robots.txt or meta robots noindex.

What About Using Noindex Follow?

Some retailers probably think that using noindex follow on the paginated series will be used as a hint to Google to crawl the links in the paginated pages but not index pagination itself. They would be wrong.

Noindex and follow is essentially kind of the same as a noindex, nofollow.

John Mueller, Google, Google Webmaster Hangout 15 Dec 2017

18% of sites noindex, follow their pagination.

On the other hand, index follow is not enough

By index follow + canonical to main on all pages of the paginated series, you accomplish the same outcome as noindex nofollow on the paginated series, as Google will not use the content of the canonicalized pages for indexing and ranking.

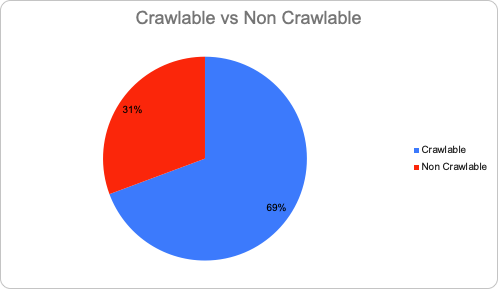

Indexability Aside, What About Crawlability?

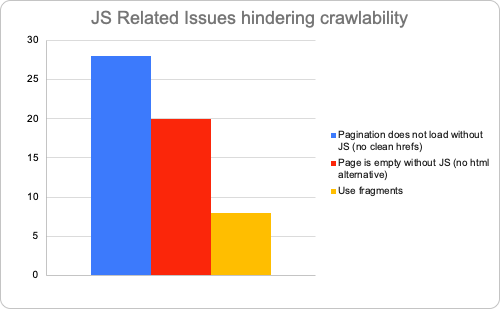

31% of the top UK women’s fashion retailers suffer from JS-related issues, preventing their paginated series from being crawled and indexed.

But hasn’t Google advanced significantly in its JS rendering?

- As previously mentioned, not all crawlers are able to process JS successfully or immediately

- Providing a non-JS alternative for rapidly changing sites or other crawlers is recommended by Google

- Google cannot crawl or index fragment identifiers – absolute URLs are still a requirement for paginated content

- Content served via infinite scroll specifically has SEO friendly guidelines since 2014, that require unique URLs and content accessibility when JS is disabled

JS-Rendered Pagination Tips:

- Don’t waste Googlebot’s time forcing the door open; just open the door and let it in via server side JS or a static HTML version.

- Don’t let your content expire by the time Google renders and indexes it after two waves of JS indexing if your website inventory changes quickly
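One way to check the “JS turned off” view is to look only at the raw HTML a server returns and list the links a non-rendering crawler could follow. Below is a minimal sketch using Python’s standard HTML parser; the sample markup and URLs are made up for illustration, and real pages will need the raw response body from your own crawler or HTTP client.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags in raw, unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawlable_links(raw_html, page_url):
    """Return absolute link targets that differ from the current page."""
    collector = LinkCollector()
    collector.feed(raw_html)
    targets = []
    for href in collector.hrefs:
        # Fragments are dropped, so "#?page=2" collapses back to the page itself.
        absolute = urldefrag(urljoin(page_url, href)).url
        if absolute != page_url and absolute not in targets:
            targets.append(absolute)
    return targets


if __name__ == "__main__":
    page = "https://example.com/dresses"
    server_rendered = '<nav><a href="/dresses/page/2">2</a><a href="/dresses/page/3">3</a></nav>'
    js_only = '<nav><a href="#?page=2">2</a><button onclick="loadMore()">Load more</button></nav>'
    print(crawlable_links(server_rendered, page))
    print(crawlable_links(js_only, page))
```

If the second kind of markup is all your category pages return before JavaScript runs, the list comes back empty, which means there is no path to the rest of the series for any crawler that does not render JS.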

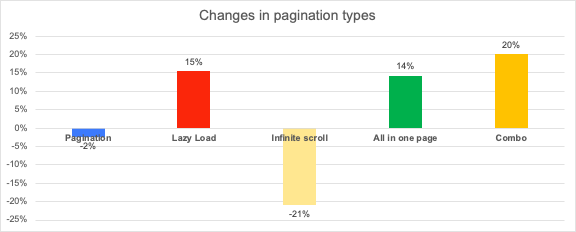

Has The Approach To Search Engine Friendly Pagination Changed During COVID?

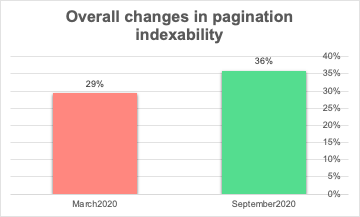

Surprisingly, the short answer is yes. It seems that from March-September 2020, some of the biggest retailers made changes in this area, but not only the big ones.

Good News: Overall Positive Changes in Pagination Indexability

- SEO friendly pagination has increased by 23% since March 2020

- From N to Y:

  - 29% were S/M Brands

  - Very big brands made changes

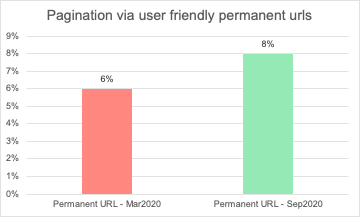

Good News: The Use of Permanent URLs for Paginated Series is on the Rise

This is a positive direction to avoid mixing pagination parameters with other parameters retailers need to keep blocked to keep their crawl budget in check.

Good News: Brands Adhering to (Pagination) Canonical Best Practices

Though things are probably slow-moving for large retailers, it’s looking like things have begun to change and retailers are giving more priority to their organic visibility during COVID.

Death to Infinite Scroll?

Brands seem to be abandoning infinite scroll more than any other pagination setup, and some are testing it alongside other pagination practices. This probably stems from the difficulties in indexing it.

The Google guide to making infinite scroll search engine friendly can definitely help retailers opting in for this approach.

Unfortunately, this is consistent with the growth in the number of brands implementing infinite scroll badly.

More Changes in Pagination Practices for eCommerce Brands During COVID

- The number of brands adding “noindex follow” to their pagination has increased by 20% (a total of 18% of brands)

- The number of brands with rel=next/prev in their code has increased by 6% (45% of brands)

- Crawlability numbers remain unchanged, same issues

The Unexplained: Changes in Pagination Practices With Unclear Motivations

- Add rel next previous to a pagination blocked by robots

- Change canonical to self but add noindex (follow) on pagination

- Move to infinite scroll without unique URLs for pagination

- Fix bad canonical, point it to main

- Change pagination type without looking at SEO friendliness or redirecting old to new

The Conclusion Remains, despite some improvement trend during COVID:

64% of women’s fashion brands in the UK do not meet the search-friendly pagination criteria

Consistent with Adam’s findings of 65% in 2019 for Alexa’s top 150 sites

Search Engine Friendly Pagination FAQs

1. What do you need to leverage your pagination for increased search engine visibility? Meet the Search Engine Friendly Criteria:

- URL Uniqueness: /category-page?page=2 or /category-page/page2 or All in One (no pagination)

- Crawlability: Avoid using fragment identifiers (#), use clean hrefs from the website structure.

- Indexability: Don’t block by robots.txt or meta robots, canonical to self, and make sure your pagination is properly linked from the website – as you would do for any other page you wish to properly index its content.
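The criteria above can be roughed out as an automated check. This is only a sketch using Python’s standard library, not a replacement for a proper crawl. The function name, the example.com URLs and the sample HTML are hypothetical; the canonical comparison is a plain string match; and Python’s robots.txt parser uses simple prefix matching, so it will not mirror Googlebot exactly. It also doesn’t check how the pagination is linked from the rest of the site.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag
from urllib.robotparser import RobotFileParser


class HeadSignals(HTMLParser):
    """Collects the meta robots directive and the canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.robots = (attributes.get("content") or "").lower()
        elif tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")


def check_paginated_page(url, html, robots_txt, first_page_url):
    """Return a pass/fail flag per criterion for one paginated URL."""
    signals = HeadSignals()
    signals.feed(html)

    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())

    return {
        # URL uniqueness: page 2 must not collapse into page 1 once the fragment is dropped.
        "unique_url": urldefrag(url).url != urldefrag(first_page_url).url,
        # Indexability: not blocked by robots.txt or meta robots, canonical to self.
        "not_blocked_by_robots_txt": robots.can_fetch("Googlebot", url),
        "no_noindex": "noindex" not in signals.robots,
        "self_canonical": signals.canonical == url,
    }


# Hypothetical page 2 of a category, for illustration only.
sample_html = """
<html><head>
<title>Dresses | Page 2</title>
<link rel="canonical" href="https://example.com/dresses?page=2">
</head><body></body></html>
"""
report = check_paginated_page(
    url="https://example.com/dresses?page=2",
    html=sample_html,
    robots_txt="User-agent: *\nDisallow: /checkout",
    first_page_url="https://example.com/dresses",
)
print(report)
```

Any flag that comes back False points at the criterion to investigate first.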

2. Will my paginated pages start ranking for my key phrases, cannibalizing my category page? While this is a possibility, the chances are slim if you do this right:

- Make sure you maximize all SEO signals on the first page of your paginated series (i.e. the category page): optimize your meta tags and include unique, quality content

- “De-optimise” the pages in your paginated series, i.e. don’t include any content, and include pagination information in your meta title, e.g. Category | Page 2. A meta description is not necessary.

- Google will pick up those signals and understand which page it needs to rank. If it still ranks one of your paginated pages, this means that there’s something about that page that confuses Google into thinking it’s more important. Compare the pages and see what you’re missing. This is not a common scenario.

3. We can only implement our pagination via client side JS. Is that still OK?

While it’s not ideal, Google is improving the speed at which JS is rendered every day. 70% of my pagination was indexed within a few days of rendering it on the client side. This is a good interim solution, but aim for a server side + static HTML version to guarantee indexing, and at a faster rate, especially for rapidly changing sites.

4. A flat website architecture following the implementation of search-friendly pagination is not ideal. What would you do differently?

This is correct, but I’m a firm believer that flat is better than too deep, at least temporarily, because this is not over. My recommendation would be to look at the different pagination test resources at the end of this article and try different approaches that would aim to satisfy both UX & SEO. Test until you find the sweet spot for your site.

5. Are there downsides to search engine friendly pagination?

It’s all a matter of whether your search engine friendly pagination has been implemented properly. I’ve struggled with some initial post-release woes but I provide details on how these were all solved (except for the site depth, which is getting an ongoing treatment). In general, the results make this effort absolutely worth it – for big eCommerce brands to increase their share, and for small brands as a tactic to compete with the greats.

6. Should you have all your paginated pages at the highest layer?

That’s how far I managed to go with my testing. My phase two would be to have the top 10-20 on the first level, and then add the rest in deeper levels (recommended 2024 read on the topic: Paginated Headache for SEOs by Vincent Malischewski).

Search Engine Friendly Pagination Tips

1. Ask (crawl) yourself: Is there something unique that only lives on my pagination?

If the answer is yes, make your pagination stand on its own: crawlable, indexable, with unique URLs, whilst keeping your crawl budget (params) in check.

2. Know how your website is inner linked. Are all of your products covered?

Use your pagination as an extension of your inner linking strategy.

3. How do you build a strong business case?

A simple crawl can help. Compare your crawl with JS enabled/disabled and know exactly which pages Google is missing out on due to non-friendly pagination.
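As a rough sketch of that comparison, assuming you have exported the list of URLs discovered by each crawl from your crawler of choice (the function name and the example.com URLs below are hypothetical):

```python
def missed_without_js(js_enabled_urls, js_disabled_urls):
    """Return the URLs found only when JS is rendered, plus their share of the JS-enabled crawl."""
    rendered = set(js_enabled_urls)
    missed = sorted(rendered - set(js_disabled_urls))
    share = len(missed) / len(rendered) if rendered else 0.0
    return missed, share


# Hypothetical crawl exports for illustration only.
with_js = [
    "https://example.com/dresses",
    "https://example.com/dresses?page=2",
    "https://example.com/product/red-dress",
    "https://example.com/product/blue-dress",
]
without_js = [
    "https://example.com/dresses",
    "https://example.com/product/red-dress",
]

missed, share = missed_without_js(with_js, without_js)
print(f"{len(missed)} URLs ({share:.0%}) are only reachable with JS rendering")
for url in missed:
    print(url)
```

The list of missed URLs, and the products that sit on them, is the number to bring to stakeholders.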

4. Always think JS-disabled

Reminder: Not all search engines can crawl & render JS. Welcome crawlability with an indexable, inner linked HTML alternative, or a server side/dynamic rendered version of your pagination, as part of your solution.

5. This might not be applicable to all industries

If making pagination indexable means increasing the amount of true duplicate content into Google, this might not be the right solution for you.

6. Get a glimpse of my methodology:

Grab my template and see how your industry measures up and how big the opportunity is: bit.ly/ecom-pagination-data

Useful Search Engine Friendly Pagination Resources

- The State of Pagination & Infinite Scroll – BrightonSEO April 2019 – Adam Gent

- The State of the Web: Search Friendly Pagination and Infinite Scroll

- Pagination & SEO: Ultimate Best Practice Guide

- How to Correctly Implement Pagination for SEO

- SMX Advanced: Thriving in the New World of Pagination

- Paginated Headache for SEOs – Vincent Malischewski (NEW!)

- JavaScript and SEO: The Difference Between Crawling and Indexing

- Is Google Ready to Catch Up with JavaScript?

- How to use pagination and infinite scroll on your category page SEO

- How Site Pagination and Click Depth Affect SEO – An Experiment (Portent)

- Infinite Scrolling, Pagination Or “Load More” Buttons? Usability Findings In eCommerce

- The Ultimate Pagination – SEO Guide (Audisto)

- Google Search for Developers, Dynamic Rendering Guide

- Infinite Scroll Guidelines, Official GWC, Feb 13, 2014

- Google: Stop Using Fragment Identifiers (SER)

- 5 Mistakes Using Rel Canonical